Following on from my previous post, I wanted to try out the HADR functionality in SQL Server “Denali” CTP1.

To test the solution, I wanted to run SQL Server HADR in a virtual machine environment, with all the VMs running on one physical host.

This seemed like a pretty straightforward task at first. There were a number of blogs with step by step instructions, however none of them actually showed HADR running and I was soon to find out why. After following the steps and finding that it did not work, I did some digging around online and, thanks to the help of Justin Erickson at Microsoft, found that Books Online is unclear on the pre-requisites (and soon to be corrected). Essentially, it sounds like you can run HADR on a single node cluster and this is what the other blog posters, and myself initially, had done. This is not the case. HADR must run on a multi-node cluster. So here are the steps to set this up in a virtual machine environment:

Pre-requisites

Hardware (physical)

One machine running Windows Server 2008 R2 with enough memory to run three virtual machines (I had 8GB and this was sufficient)

Hardware (virtual)

One domain controller running Windows Server 2008 R2 which I have name DenaliDC.

Two domain joined Windows Server 2008 R2 VMs with SQL Server Denali installed. Note: These cannot be a copy of the same VHD or clustering will fail (and it will take you ages to work out why). I have named these DenaliOne and DenaliTwo.

Note: I had some problems connecting to the default instance in Management Studio and so I have used named instances DenaliOne\DenaliOne and DenaliTwo\DenaliTwo.

Virtual Network

I created five private virtual networks as follows:

This is a bit over the top, but virtual network cards are free, so why not?

Domain Controller Networking

I added two network cards on the DataOne and DataTwo networks. DenaliOne has a fixed IP address of 192.168.10.1 and DenaliTwo has a fixed IP address of 192.168.20.1.

I added DHCP with a scope of 192.168.10.10 – 192.168.10.20.

I added DNS and created a default forward lookup zone.

DenaliOne Networking

I added five network cards as follows:

Name: DataOne

IP Address: Obtain automatically

Name: DataTwo

IP Address: 192.168.20.11

Default gateway and preferred DNS Server: 192.168.10.1.

Name: iSCSIOne

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 6

IP Address: 192.168.2.1

Register this connection’s address in DNS: Unchecked

Name: iSCSITwo

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 6

IP Address: 192.168.3.1

Register this connection’s address in DNS: Unchecked

Name: Heartbeat

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 4

IP Address: Obtain an IPv6 address automatically

Register this connection’s address in DNS: Unchecked

DenaliTwo Networking

I added five network cards as follows:

Name: DataOne

IP Address: Obtain automatically

Name: DataTwo

IP Address: 192.168.20.12

Default gateway and preferred DNS Server: 192.168.10.1.

Name: iSCSIOne

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 6

IP Address: 192.168.2.2

Register this connection’s address in DNS: Unchecked

Name: iSCSITwo

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 6

IP Address: 192.168.3.2

Register this connection’s address in DNS: Unchecked

Name: Heartbeat

Remove Client for Microsoft Networks, QoS Packet Scheduler, File and Printer Sharing for Microsoft Networks, IP Version 4

IP Address: Obtain an IPv6 address automatically

Register this connection’s address in DNS: Unchecked

Additional Steps

There is a slight problem with clustering in a virtual machine which is the inability to share a VHD. The way around this is to use a virtual iSCSI device. This involves either using Windows Storage Server or a product called Starwind. I hadn’t used either before and opted for a free trial of Starwind as there was less to download.

Starwind Setup

1. Download and install Starwind on the DC from here.

2. Add a host with an IP address of 127.0.0.1 and Port of 3261.

3. Double click the host and logon with a username of root and password of starwind.

4. Add a target with a suitable name (I used Quorum) and the following settings:

a. Storage type of Hard Disk

b. Device type of Basic Virtual and Image File device

c. Device creation method: Create new virtual disk (place it wherever you want with a .img extension)

d. Image File device parameters: Allow multiple concurrent iSCSI connections

e. Accept defaults for all other parameters.

5. You should end up with something like this:

6. Click on the host and then click Network in Server Settings. You should then see the IP Address and port that the host is using. In this case I will use 192.168.10.1 and 3260. Note down these values.

Install iSCSI Inititor

On both SQL Server VMs:

1. Install the iSCSI Initiator from here. There is also a Starwind version, but I used the Microsoft one.

2. Run iSCSI Initiator and click the Discovery tab.

3. Click Discover Portal and enter the values from the Starwind software that you noted earlier.

4. Now click the Targets tab and click Refresh.

5. Connect to the Starwind target.

6. Run the Disk Management console (Diskmgmt.msc) and you should see a new disk.

7. Bring this disk online in both virtual machines, but only format it from one VM (DenaliOne)

Add Windows Clustering

On both SQL Server boxes run Server Manager and add the Failover Clustering feature. After any reboots check that you can still see the iSCSI disk and if not run iSCSI Initiator and check that you are connected to the target. It is quicker to disconnect and connect again than wait for the automatic reconnection.

Follow these steps to build the cluster:

1. On DenaliOne run Failover Cluster Manager and in the Actions box click Create a Cluster.

2. Skip the introduction screen and add DenaliOne and DenaliTwo. They should appear with their FQDNs.

3. Click Next through all of the warnings until the Cluster Validation runs.

4. Resolve and Cluster Validation errors. Don’t worry about warnings for this example.

Install SQL Server HADR

1. On DenaliOne:

a. Create a folder for transferring logs. I created C:\HADRShare\Logs.

b. Share this folder.

2. On both SQL Server VMs:

a. Star SQL Server Configuration Manager.

b. Right click the SQL Server service and click Properties.

c. Click the SQL HADR tab.

d. Click Enable SQL HADR service and click OK.

e. Stop and Restart SQL Server.

3. On DenaliOne run SQL Server Management Studio.

a. Create a database (I called this SQLHADR). For simplicity with backup and restore, place the files in a directory that exists on both servers. I created C:\Databases\Data on both virtual machines and used this.

b. Create a table in this database and add a row to the table.

c. Backup this database and restore it on DenaliTwo with the RESTORE WITH NORECOVERY option.

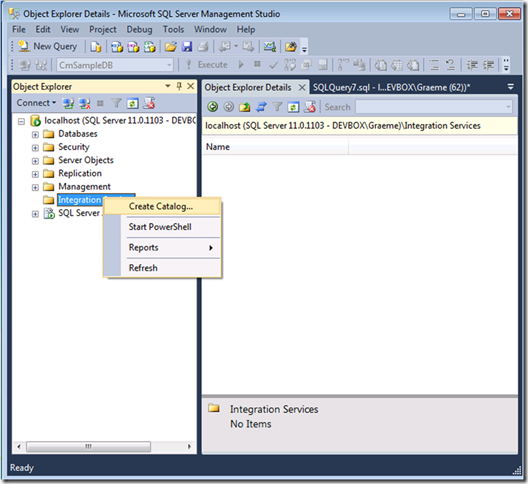

d. Expand Management and right click Availability Groups.

e. Click New Availability Group and click Next.

f. Enter a suitable name (I used SQLHADR) and click Next.

g. On the Select Databases page select SQLHADR and click Next.

h. Click Add and connect to DenaliTwo.

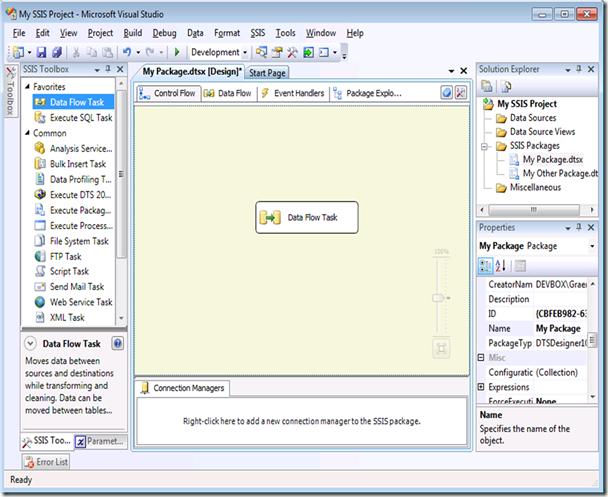

i. I want to use the secondary, or mirror, database to offload querying from the primary server. It is no longer necessary to create a snapshot and the querying happens with live data. To configure this change the read mode in Secondary Role to Allow Read Intent Connections and click Next:

j. Click Finish.

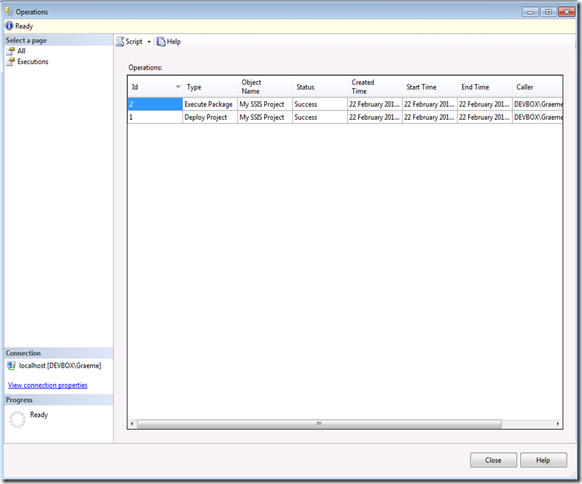

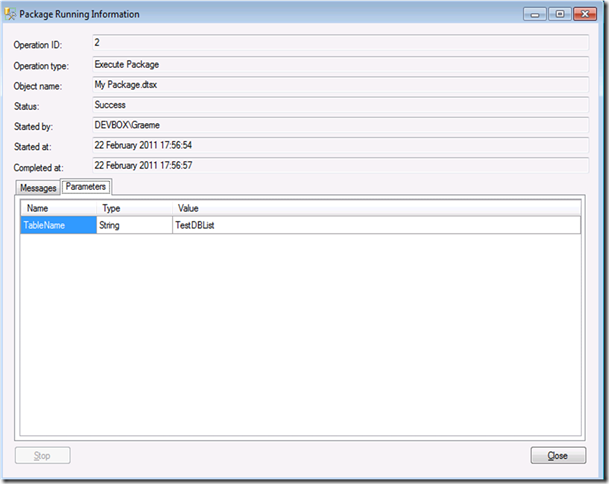

Start Data Synchronization

1. Click Start Data Synchronization.

2. Specify the share that you created previously and click Test.

3. Click OK.

4. Click Close.

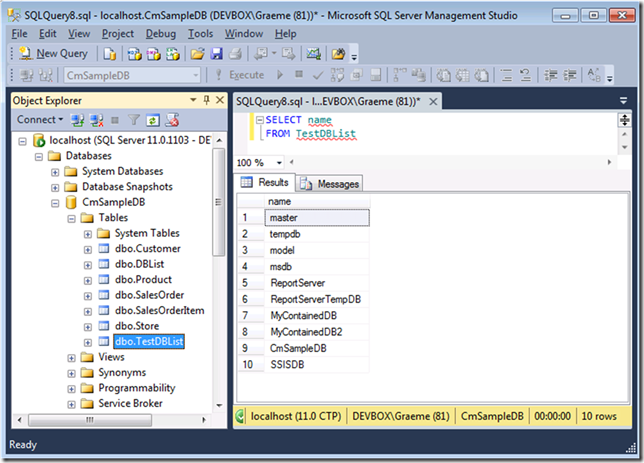

Querying the Secondary Instance

With SQL HADR you can now query the secondary database. Previously, you could not query the mirror. Now, as long as we allowed connections when we set up the availability group, we can offload queries from the primary database:

Now, with SQL Server HADR, we have a high availability solution providing a failover database, but we can also offload querying to this database and have a reduced workload on our primary server. We’ve come a long way since log-shipping!

Performing a Failover

1. In Management Studio on the DenaliTwo\DenaliTwo instance expand Management, expand Availability Groups, expand SQLHADR, expand Availability Replicas and right click DenaliTwo\DenaliTwo.

2. Click Force Failover.

3. Click OK.

Note: Do not try to failover the primary database. This will fail.

Notes

This wasn’t the most straightforward environment to setup, mostly due to inaccurate step by step instructions on other blogs (never trust a solution that doesn’t show it working at the end). The key things that I found were:

- You need a two node cluster. This requires a shared disk, although SQL Server doesn’t use this shared disk.

- For some reason, I had inconsistent problems with default instances. If this is the case, try a named instance.

- When you create the networks in Hyper-V, create one at a time and configure it in the VMs before creating the next network. This ensures that you know which network is which and helps with troubleshooting.

- If anything doesn’t work and you change the configuration, you must disable HADR on all instances, restart the instances, re-enable HADR and restart the instances again.

- I had HADR running on every instance on every server, although it now seems to work with only the mirrored instance having HADR enabled.

- Perform failovers on the secondary database, not the primary. This makes sense when you have multiple secondary databases because you will failover the new primary.