
Thursday, December 31, 2009

CFP: Elsevier Library Connect Newsletter

In 2010, the second Library Connect Newsletter issue (8:2; April 2010) will focus on the theme "International AND Interdisciplinary."

Do you have something compelling to say on that topic? Is your library or university or research institution supporting international/interdisciplinary teams or customers and struggling with concomitant issues? If so, what best practices can you share, to help other information managers or specialists succeed in similar situations?

To propose an article or interview to appear in the Library Connect Newsletter, 8:2 (April 2010), please send a brief abstract and your name/title/institutional affiliation to libraryconnect@elsevier.com.

CFP: Internet Reference Services Quarterly

http://www.lib.jmu.edu/org/jwl/

Internet Reference Services Quarterly (IRSQ) seeks manuscripts for Volume 15 (2010). The journal covers all aspects of reference service provided via the Internet.

Why publish in IRSQ?

o Peer reviewed

o Four-week review process

o Editorial support for new authors

o Narrow scope focuses on web technologies as they relate to reference services

o Wide audience of all library types and disciplines – public, academic, special, humanities, science, etc.

More information for authors is available at the journal website - http://www.tandf.co.uk/journals/journal.asp?issn=1087-5301&subcategory=AH250000&linktype=44

Contact the editor with questions or to discuss your manuscript.

Manuscripts are accepted on a rolling basis. Manuscripts submitted by the following dates are likely to be included in the corresponding issue, assuming the manuscript clears the review process.

Vol 15 (2) February 4

Vol 15 (3) May 6

Vol 15 (4) August 17

Vol 16 (1) November 4

This journal is published by the Taylor & Francis Group, with offices in Philadelphia, London, and elsewhere. Request a free online issue at the journal website:

http://www.tandf.co.uk/journals/journal.asp?issn=1087-5301&subcategory=AH250000

Brenda Reeb

Editor, Internet Reference Services Quarterly

Director, Business & Government Information Library

River Campus – Rhees 210

University of Rochester

Rochester, NY 14627

voice 585-275-8249

cell 585-414-0146

fax 585-273-5316

email brenda.reeb@rochester.edu

Wednesday, December 30, 2009

Yamaha NS-SP1800BL 5.1 Channel

Product Specifications

- Brand Name : Yamaha

- Color Name : Black

- Speaker Type : Home theater speaker system

- Driver Configuration : 1x 2.5" Cone

- Frequency Response Curve : 28 Hz - 50 kHz

- Audio Sensitivity : 82 decibels

- Impedance : 6 ohm

- Cabinet Material Type : Plastic

- Speaker Driver Material Type : Paper

- Price : $149.95

Logitech Rechargeable Speaker S315i

A custom, full-range driver brings you crisp, sharp sound. And since it's rechargeable, this sleek and stylish speaker goes with you wherever you go. You can play and charge your iPod or iPhone with a dock connector. The result is a product that makes the most of your music. Go ahead. Play a song or two or 300 without recharging.

Technical Details

- Rechargeable with up to 20 hours of listening pleasure (in power-saving mode).

- Play and charge both iPod and iPhone.

- Weighs just 1.47 pounds, so it's easy to take with you.

- A 3.5 mm auxiliary input lets you connect and listen to other portable players.

- Fold-in foot makes it easy to take with you around town.

- Price : $114.00

Journal call for papers from The Electronic Library

New section in The Electronic Library

Section Co-editors: Dr Gillian Oliver and Professor G E Gorman, Victoria University of Wellington, New Zealand

Digital preservation management and technology (DPMT) are two inter-related issues confronting all memory institutions: libraries, archives, galleries and museums. Such institutions are addressing very similar questions regarding the management of preservation activities and of preserved artefacts, as well as the technologies required to preserve, disseminate and access these artefacts. For many, this has been the unexpected consequence of rushing to reformat existing collections to enable digital accessibility. Resourcing issues (shortage of expertise, limited availability of funding) are forcing collaborative activity to an unprecedented degree between the distinctly different collecting paradigms represented by these institution types. As the functionality of web technologies and social media software increasingly influences the ways in which these institutions operate, the focus on DPMT, on collaboration between technologists and managers, and on inter-institutional collaboration will increase. It is therefore timely to consider devoting a significant section of an existing journal (The Electronic Library) to capture interest and research in this sector.

In time, Digital Preservation Management and Technology may become a full journal, the focus of which will be research in the broad field of digital preservation management and related technologies in this cross-sectoral domain, which includes academic, corporate, government, scientific and commercial contexts. It will address issues relating to the continuity of digital information, including digital objects, metadata and the context of their creation, management and use. It will encompass all purposes for which information is managed by the different occupational groups: as evidence, for accountability, for knowledge and awareness and for pleasure and entertainment. Coverage is intentionally international. The emphasis will be on research and conceptual papers in these fields.

Articles should be either conceptual papers or research papers in the region of 3000-6000 words.

All submissions will be double-blind peer reviewed by members of the Editorial Advisory Board.

There will be an international Editorial Advisory Board whose specific task will be to double-blind peer review submissions. The 20-30 Board members will be from North America, the UK, Australasia, Asia and elsewhere.

Please send submissions to 'Digital Preservation Management and Technology' at http://mc.manuscriptcentral.com/tel. Full information and guidance on using ScholarOne Manuscripts is available on The Electronic Library author guidelines page.

http://info.emeraldinsight.com/librarians/writing/calls.htm?PHPSESSID=e6hiscu7eneo7oe2o6sft6huv6&id=1998

Tuesday, December 29, 2009

Infrastructure Architect

There doesn’t seem to be any formal definition of what infrastructure architecture is. The only one I found by searching the web is from the Open Group, where it is defined as:

Infrastructure architecture connotes the architecture of the low level hardware, networks, and system software (sometimes called "middleware") that supports the applications software and business systems of an enterprise.

The main thrust of this definition is to position Infrastructure Architecture relative to TOGAF, and it ends up calling infrastructure architecture everything other than specific software project architectures, which, whilst not incorrect, is a rather exclusive definition.

It would appear that there is not a formal definition of Infrastructure Architecture for IT so I have come up with my own:

Infrastructure Architecture is the set of abstractions used to model the set of basic computing elements which are common across an organization and the relationships between those elements.

So it would include the architecture of:

• Networks

• Storage

• Platforms

o Middleware

• Information

• Access Management

o Security

o Identity

• Management

o Deployment

o Provisioning

It does not include anything to do with design processes, operational processes or software design; these fall under the remit of SDLC, Operations Lifecycle and Software Architecture respectively. It does not include any business elements. It is a major subset of Enterprise Architecture and the platform for a Service Oriented Architecture. It does include Service Oriented Infrastructure and Grid.

The best post to explain it:

http://blogs.technet.com/michael_platt/archive/2005/08/16/409278.aspx

sAnTos

Sunday, December 27, 2009

Multi-Server Management with SQL Server 2008 R2

A key challenge for many medium to large businesses is the management of multiple database server instances across the organization. SQL Server has always had a pretty good story with regard to multi-server management through automated multi-server jobs, event forwarding, and the ability to manage multiple instances from a single administrative console. In SQL Server 2008, Microsoft introduced a new solution called Data Collector for gathering key server performance data and centralizing it in a management data warehouse; and in SQL Server 2008 R2, this technology underpins a new way to proactively manage server resources across the enterprise.

With SQL Server 2008 R2, database administrators can define a central utility control point (UCP) and then enroll SQL Server instances from across the organization to create a single, central dashboard view of server resource utilization based on policy settings that determine whether a particular resource is being over, under, or well utilized. So for example, a database administrator in an organization with multiple database servers can see at a glance whether or not overall storage and CPU resources across the entire organization are being utilized appropriately, and can drill-down into specific SQL Server instances where over or under utilization is occurring to identify where more resources are required (or where there is spare capacity).

Sounds pretty powerful, right? So you’d expect it to be complicated to set up and configure. However, as I hope to show in this article, it’s actually pretty straightforward. In SQL Server Management Studio, there’s a new tab named Utility Explorer, and a Getting Started window that includes shortcuts to wizards that you can use to set up a UCP and enroll additional server instances.

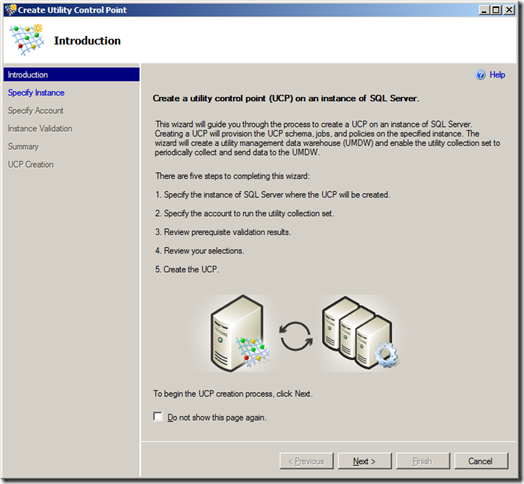

Clicking the Create a Utility Control Point link starts the following wizard:

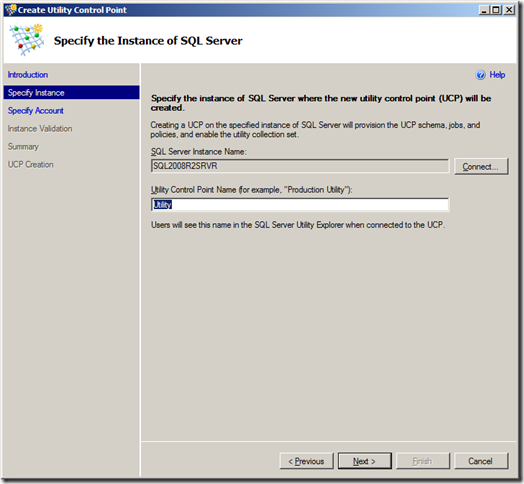

The first step is to specify the SQL Server instance that you want to designate as a UCP. This server instance will host the central system management data warehouse where the resource utilization and health data will be stored.

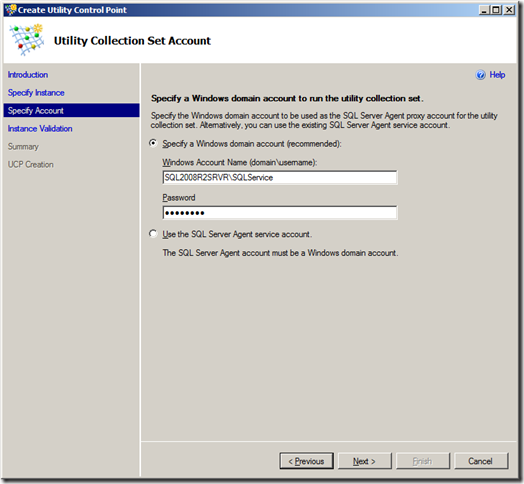

Next you need to specify the account that will be used to run the data collection process. This must be a domain account rather than a built-in system account (you can specify the account that the SQL Server Agent runs as, but again this must be a domain account).

Now the wizard runs a number of verification checks as shown here:

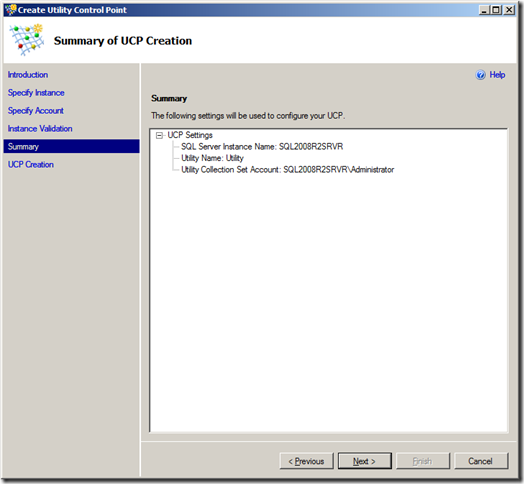

Assuming all of the verification checks succeed, you’re now ready to create the UCP.

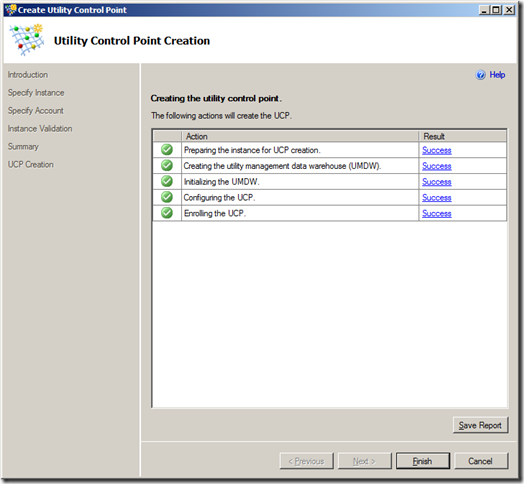

The wizard finally performs the tasks that are required to set up the UCP and create the management data warehouse.

After you’ve created the UCP, you can view the Utility Explorer Content window to see the overall health of all enrolled SQL Server instances. At this point, the only enrolled instance is the UCP instance itself, and unless you’ve waited for a considerable amount of time, there will be no data available. However, you can at least see the dashboard view and note that it shows the resource utilization levels for all managed instances and data-tier applications (another new concept in SQL Server 2008 R2 – think of them as the unit of deployment for a database application, including the database itself plus any server-level resources, such as logins, that it depends on).

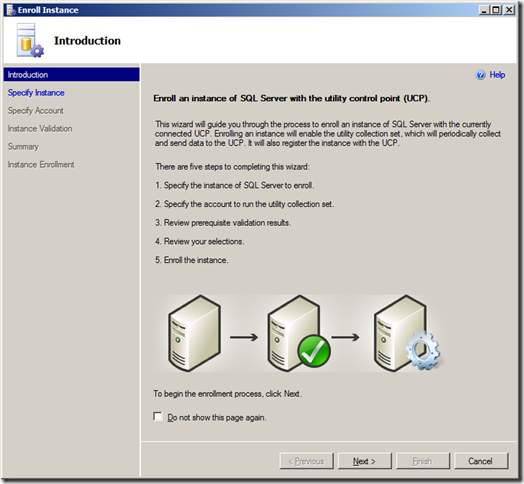

To enroll a SQL Server instance, you can go back to the Getting Started window and click Enroll Instances of SQL Server with a UCP. This starts the following wizard:

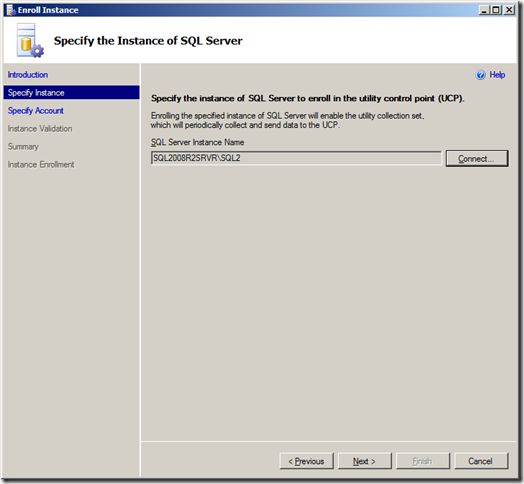

As before, the first step is to specify the instance you want to enroll. I’ve enrolled a named instance on the same physical server (actually, it’s a virtual server but that’s not really important!), but you can of course enroll any instance of SQL Server 2008 R2 in your organization (it’s quite likely that other versions of SQL Server will be supported in the final release, but in the November CTP only SQL Server 2008 R2 is supported).

As before, the wizard performs a number of validation checks.

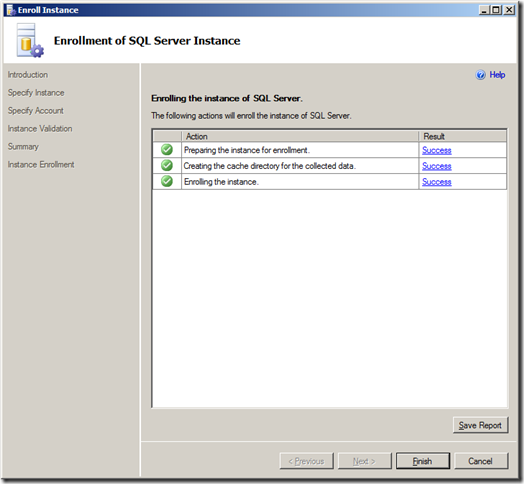

Then you’re ready to enroll the instance.

The wizard performs the necessary tasks, including setting up the collection set on the target instance.

When you’ve enrolled all of the instances you want to manage, you can view the overall database server resource health from a single dashboard.

In this case, I have enrolled two server instances (the UCP itself plus one other instance) and I’ve deliberately filled a test database. Additionally, the virtual machine on which I installed these instances has a small amount of available disk space. As a result, you can see that there is some over-utilization of database files and storage volumes in my “datacenter”. To troubleshoot this overutilization, and find the source of the problem, I can click the Managed Instances node in the Utility Explorer window and select any instances that show over (or under) utilization to get a more detailed view.

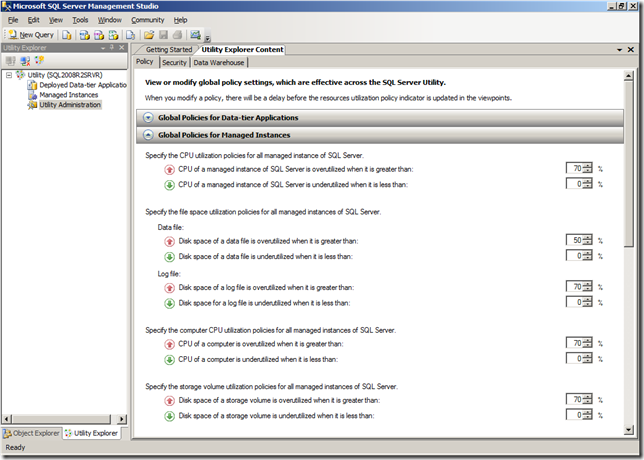

Of course, your definition of “over” or “under” utilized might differ from mine (or Microsoft’s!), so you can configure the thresholds for the policies that are used to monitor resource utilization, along with how often the data is sampled and how many policy violations must occur in a specified period before the resource is reported as over/under utilized.

These policy settings are global, and therefore apply to all managed instances. You can set individual policy settings to override the global policies for specific instances, though that does add to the administrative workload and should probably be considered the exception rather than the rule.
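To make the mechanics of such a policy a little more concrete, here is a minimal Python sketch of a threshold-plus-violation-count rule with a per-instance override. It is purely illustrative: the class, function, and instance names and all of the numbers are made up, and this is not how SQL Server itself stores or evaluates its utilization policies.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class UtilizationPolicy:
    """Hypothetical policy; thresholds are percentages of capacity."""
    over_threshold: float = 70.0   # a sample above this is an "over" violation
    under_threshold: float = 10.0  # a sample below this is an "under" violation
    violations_required: int = 3   # violations needed in the window to report


def classify(samples: Sequence[float], policy: UtilizationPolicy) -> str:
    """Classify one evaluation window of samples as 'over', 'under', or 'well'."""
    over = sum(1 for s in samples if s > policy.over_threshold)
    under = sum(1 for s in samples if s < policy.under_threshold)
    if over >= policy.violations_required:
        return "over"
    if under >= policy.violations_required:
        return "under"
    return "well"


def effective_policy(
    global_policy: UtilizationPolicy,
    overrides: Dict[str, UtilizationPolicy],
    instance_name: str,
) -> UtilizationPolicy:
    """Use the instance-specific override if one exists, else the global policy."""
    return overrides.get(instance_name, global_policy)


global_policy = UtilizationPolicy()
# Hypothetical override: tolerate higher utilization on a test instance.
overrides = {"SERVER1\\TEST": UtilizationPolicy(over_threshold=90.0)}

cpu_samples = [85.0, 88.0, 40.0, 92.0]  # made-up CPU % readings for one window
print(classify(cpu_samples, effective_policy(global_policy, overrides, "SERVER1")))
print(classify(cpu_samples, effective_policy(global_policy, overrides, "SERVER1\\TEST")))
```

With the global policy, three of the four samples exceed 70%, so the resource is reported as over-utilized; under the override only one sample exceeds 90%, so the same readings count as well utilized.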

My experiment with utility control point-based multi-server management was conducted with the November community technology preview (CTP), and I did encounter the odd problem with collector sets failing to upload data. However, assuming these kinks are ironed out in the final release (or were caused by some basic configuration error of my own!), this looks to be the natural evolution of the data collector that was introduced in SQL Server 2008, and should ease the administrative workload for many database administrators.

Thursday, December 24, 2009

Further Adventures in Spatial Data with SQL Server 2008 R2

Wow! Doesn’t time fly? In November last year I posted the first in a series of blog articles about spatial data in SQL Server 2008. Now here we are over a year later, and I’m working with the November CTP of SQL Server 2008 R2. R2 brings a wealth of enhancements and new features – particularly in the areas of multi-server manageability, data warehouse scalability, and self-service business intelligence. One new feature that perhaps isn’t getting as much of the spotlight as it deserves is the newly added support for including maps containing spatial data in SQL Server Reporting Services reports. This enables organizations that have taken advantage of the spatial data support in SQL Server 2008 to visualize that data in reports.

So, let’s take a look at a simple example of how you might create a report that includes spatial data in a map. I’ll base this example on the same Beanie Tracker application I created in the previous examples. To refresh your memory, this application tracks the voyages of a small stuffed bear named Beanie by storing photographs and geo-location data in a SQL Server 2008 database. You can download the script and supporting files you need to create and populate the database from here. The database includes the following two tables:

-- Create a table for photo records

CREATE TABLE Photos

([PhotoID] int IDENTITY PRIMARY KEY,

[Description] nvarchar(200),

[Photo] varbinary(max),

[Location] geography)

GO

-- Create a table to hold country data

CREATE TABLE Countries

(CountryID INT IDENTITY PRIMARY KEY,

CountryName nvarchar(255),

CountryShape geography)

GO

The data in the Photos table includes a Location field that stores the lat/long position where the photograph was taken as a geography point. The Countries table includes a CountryShape field that stores the outline of each country as a geography polygon. This enables me to use the following Transact-SQL query to retrieve the name, country shape, and number of times Beanie has had his photograph taken in each country:

SELECT c.CountryName,

c.CountryShape,

(SELECT COUNT(*)

FROM Photos p

WHERE p.Location.STIntersects(c.CountryShape) = 1)

AS Visits

FROM Countries c

With the sample data in the database, this query produces the following results:

| CountryName | CountryShape | Visits |
| --- | --- | --- |
| France | 0xE6100000 … (geography data in binary format) | 1 |
| Egypt | 0xE6100000 … (geography data in binary format) | 2 |
| Kenya | 0xE6100000 … (geography data in binary format) | 1 |
| Italy | 0xE6100000 … (geography data in binary format) | 2 |
| United States of America | 0xE6100000 … (geography data in binary format) | 7 |
| United Kingdom | 0xE6100000 … (geography data in binary format) | 2 |

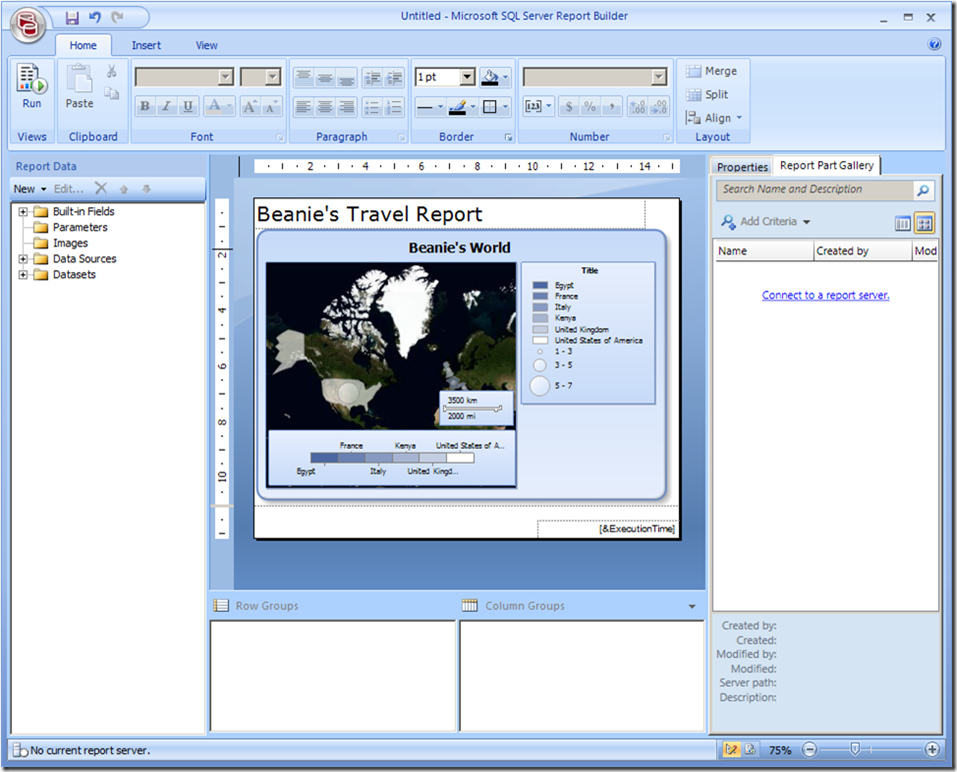
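If you want a feel for what the per-country count is doing, the following Python sketch mimics it on a flat plane: for each country shape, it counts the photo locations that fall inside it. This is a simplified stand-in, not what SQL Server does internally: the geography type works on an ellipsoidal model of the Earth, whereas this uses a planar ray-casting test, and the "countries" and points are invented for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y), e.g. (longitude, latitude) on a flat plane


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Planar ray-casting test; ignores the curvature of the Earth."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def visits_per_country(
    countries: Dict[str, List[Point]], photos: List[Point]
) -> Dict[str, int]:
    """For each country, count the photo locations that fall inside its shape."""
    return {
        name: sum(1 for p in photos if point_in_polygon(p, shape))
        for name, shape in countries.items()
    }


# Invented rectangular "countries" and photo locations, for illustration only.
countries = {
    "Boxland": [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)],
    "Squaria": [(20.0, 0.0), (30.0, 0.0), (30.0, 10.0), (20.0, 10.0)],
}
photos = [(5.0, 5.0), (2.0, 8.0), (25.0, 3.0), (50.0, 50.0)]

print(visits_per_country(countries, photos))  # {'Boxland': 2, 'Squaria': 1}
```

The dictionary comprehension plays the role of the correlated subquery: it is evaluated once per country, and the last point lies in neither shape, so it is not counted anywhere.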

To display the results of this query graphically on a map, you can use SQL Server Business Intelligence Development Studio or the new Report Builder 3.0 application that ships with SQL Server 2008 R2 Reporting Services. I’ll use Report Builder 3.0, which you can install by using Internet Explorer to browse to the Report Manager interface for the SQL Server 2008 R2 Reporting Services instance where you want to create the report (typically http://<servername>/reports) and clicking the Report Builder button.

When you first start Report Builder 3.0, the new report or dataset page is displayed as shown below (if not, you can start it by clicking New on the Report Builder’s main menu).

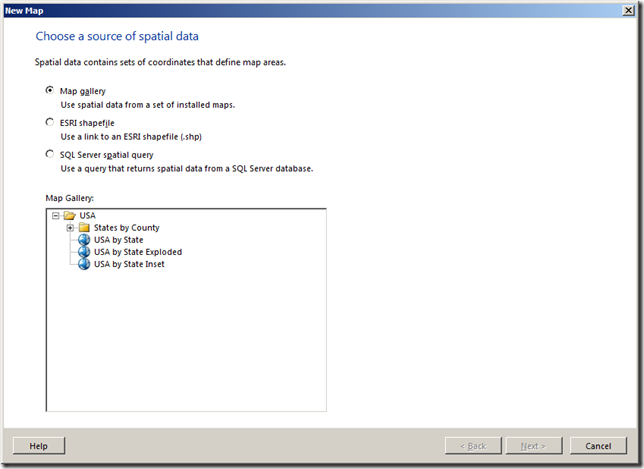

This page includes an option for the Map Wizard, which provides an easy way to create a report that includes geographic data. To start the wizard, select the Map Wizard option and click Create. This opens the following page:

SQL Server 2008 R2 Reporting Services comes with a pre-populated gallery of maps that you can use in your reports. Alternatively, you can import an Environmental Systems Research Institute (ESRI) shapefile, or you can do what I’m doing and use a query that returns spatial data from a SQL Server 2008 database.

After selecting SQL Server spatial query and clicking Next, you can choose an existing dataset or select the option to create a new one. Since I don’t have an existing dataset, I’ll select the option to Add a new dataset with SQL Server spatial data and click Next, and then create a new data source as shown here:

On the next screen of the wizard, you can choose an existing table, view, or stored procedure as the source of your data, or you can click Edit as Text to enter your own Transact-SQL query as I’ve done here:

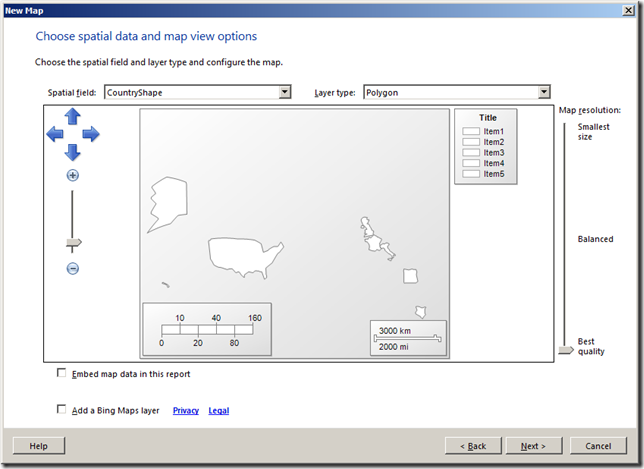

The next page enables you to select the spatial data field that you want to display, and provides a preview of the resulting map that will be included in the report.

Note that you can choose to embed the spatial data in the report, which increases the report size but ensures that the spatial map data is always available in the report. You can also add a Bing Maps layer, which enables you to “superimpose” your spatial and analytical data over Bing Maps tiles as shown here:

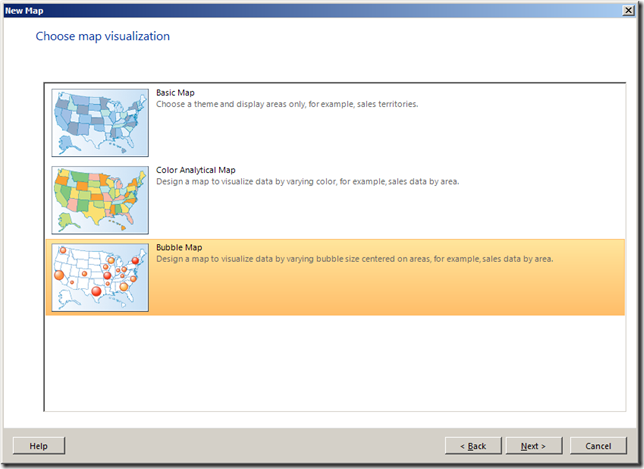

Next you can choose the type of map visualization you want to display. These include:

- Basic Map: A simple visual map that shows geographical areas, lines, and points.

- Color Analytical Map: A map in which different colors are used to indicate analytical data values (for example, you could use a color range to show sales by region in which more intense colors indicate higher sales).

- Bubble Map: A map in which the center point of each geographic object is shown as a bubble, the size or color of which indicates an analytical value.

To show the number of times Beanie has visited a country, I’m using a bubble map. Since the bubbles must be based on a data value, I must now choose the dataset that contains the values that determine the size of the bubbles.

Having chosen the dataset, I now get a chance to confirm or change the default matches that the wizard has detected.

Finally, you can choose a visual theme for the map and specify which analytical fields determine bubble size and the fill colors used for the spatial objects.

Clicking Finish generates the report, which you can then modify further in Report Builder.

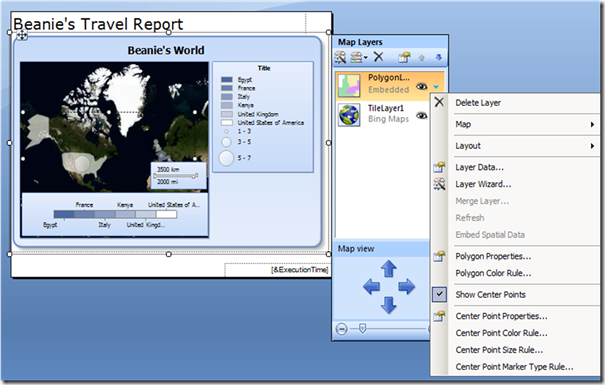

Selecting the map reveals a floating window that you can use to edit the map layers or move the area of the map that is visible in the map viewport (the rectangle in which the map is displayed).

You can make changes to the way the map and its analytical data are displayed by selecting the various options on the layer menus. For example, you can:

- Click Polygon Properties to specify a data value to be displayed as a tooltip for the spatial shapes on the map.

- Click Polygon Color Rule to change the rule used to determine the fill colors of the spatial shapes on the map.

- Click Center Point Properties to add labels to each center point “bubble” on the map.

- Click Center Point Color Rule to change the rule used to determine the color of the bubbles, including the scale of colors to use and how the values are distributed within that scale.

- Click Center Point Size Rule to change the rule used to determine the size of the bubbles, including the scale of sizes to use and how the values are distributed within that scale.

- Click Center Point Marker Type Rule to change the rule used to determine the shape or image of the bubbles, including a range of shapes or images to use and how the values are matched to shapes or images in that range.

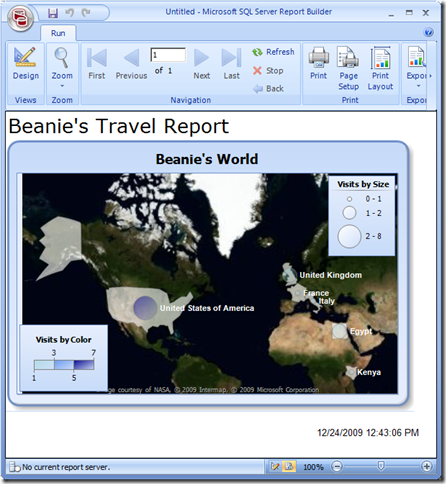
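As a rough illustration of what a size rule does, the Python sketch below spreads the visit counts from the query results linearly across a range of bubble sizes. The function name and the minimum and maximum sizes are assumptions made for the example; Report Builder offers its own distribution options, and this is not a reproduction of how it calculates them.

```python
from typing import Dict


def linear_bubble_sizes(
    values: Dict[str, int], min_size: float = 10.0, max_size: float = 40.0
) -> Dict[str, float]:
    """Map each value linearly onto the range [min_size, max_size]."""
    lo, hi = min(values.values()), max(values.values())
    span = hi - lo
    if span == 0:
        # All values are equal, so give every bubble the mid-range size.
        return {name: (min_size + max_size) / 2 for name in values}
    return {
        name: min_size + (v - lo) * (max_size - min_size) / span
        for name, v in values.items()
    }


# Visit counts taken from the query results shown earlier.
visits = {
    "France": 1,
    "Egypt": 2,
    "Kenya": 1,
    "Italy": 2,
    "United States of America": 7,
    "United Kingdom": 2,
}

for country, size in linear_bubble_sizes(visits).items():
    print(f"{country}: {size}")
```

With these assumed limits, a country with one visit gets the smallest bubble (10.0), the United States with seven visits gets the largest (40.0), and countries with two visits land at 15.0.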

At any time, you can preview the report in Report Builder by clicking Run. Here’s how my report looks when previewed.

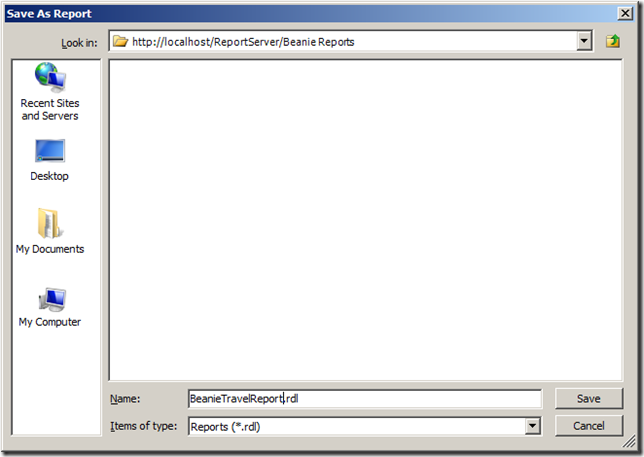

When you’re ready to publish the report to the report server, click Save on the main menu, and then click Recent Sites and Servers in the Save As Report dialog box to save the report to an appropriate folder on the report server.

After the report has been published, users can view it in their Web browser through the Report Manager interface. Here’s my published report:

I’ve only scratched the surface of what’s possible with the map visualization feature in SQL Server 2008 R2 Reporting Services. When combined with the spatial data support in SQL Server 2008 it really does provide a powerful way to deliver geographical analytics to business users, and hopefully you’ve seen from this article that it’s pretty easy to get up and running with spatial reporting.

Wednesday, December 23, 2009

Host multiple domain SSL certificates with one IP address

Microsoft Office and Word Sales to be Banned

Way back in May, a patent infringement suit was filed by XML specialists i4i against Microsoft on the grounds that Word's handling of .xml, .docx and .docm files infringed i4i's patented XML handling algorithms. Although Microsoft did lose this case, the injunction against further sale of Microsoft Word was put on hold pending the results of another appeal.

Unfortunately for Microsoft, it lost again. The company is expected to appeal once more and to request that the injunction be put on hold, possibly taking the case to the Supreme Court, unless Microsoft and i4i settle. i4i isn't patent greedy or trying to tear down Microsoft or anything like that, however.

i4i is a 30-person database design company which shipped one of the first ever XML plugins for Office. i4i is also credited with being responsible for revamping the whole USPTO database around XML so that it would be compatible with the 2000 version of Microsoft Word. The patents that i4i is suing over, surprisingly enough, do not cover XML itself. Instead they cover the specific algorithms used to read and write custom XML. This means all you OpenOffice users can breathe a sigh of relief, because i4i stated that OpenOffice does not infringe. This is also good news for current Microsoft Office users because they, more likely than not, won't be affected. The suit is only intended to affect future sales of Office and Word.

Some more good news from Microsoft is that the company is working quickly to create versions of Microsoft Word 2007 and Microsoft Office 2007 that do not have the XML features, which Microsoft claims are "little-used", by the injunction date of January 11, 2010. Microsoft also stated that the beta version of Microsoft Office 2010, which is available for download, will not contain the technology covered in the suit. Microsoft is also considering another appeal so for now all we can do is wait and listen.


CFP: 2010 Michigan Library Association Annual Conference

November 10-12 - Traverse City, MI

http://www.mla.lib.mi.us/files/AC_ProposalSubmission20091217.pdf

CALL FOR PROGRAM PROPOSALS

Information and Guidelines

Submissions welcome through February 12, 2010

Michigan Library Association (MLA) is accepting program proposals for our 119th Annual Conference at Grand Traverse Resort and Spa, Traverse City - November 10, 11, and 12, 2010. Annual Conference is our premier programming and networking event with exceptional opportunities for library professionals to learn about trends and developments in the field, share experiences, exchange ideas with colleagues, and have a great time doing it.

The 2010 conference theme “Yes We Can!” is a reflection of the Michigan library community’s determination to thrive in spite of the multi-faceted challenges we currently face. Yes we can innovate, collaborate, motivate, grow, diversify, succeed, and prosper! MLA seeks high quality programs to help libraries and library professionals succeed. The following provides important information, along with a submission form, for your reference and to guide the process. If you need additional information, please contact Eva Davis, Chair, or Denise Cook, MLA.

Call for Papers for Journal of Interlibrary Loan, Document Delivery & Electronic Reserve

The Routledge/Taylor & Francis peer-reviewed Journal of Interlibrary Loan, Document Delivery & Electronic Reserve (JILDDER) has merged with Resource Sharing & Information Networks and is now accepting articles for Spring and Summer 2010 publication. Of particular interest to JILDDER are articles regarding resource sharing, unmediated borrowing, electronic reserve, cooperative collection development, shared virtual library services, digitization projects and other multi-library collaborative efforts including the following topics:

• cooperative purchasing and shared collections

• consortial delivery systems

• shared storage facilities

• administration and leadership of interlibrary loan departments, networks, cooperatives, and consortia

• training, consulting and continuing education provided by consortia

• use of interlibrary loan statistics for book and periodical acquisitions, weeding and collection management

• selection and use of cutting-edge technologies and services used for interlibrary loan and electronic reserve, such as Ariel, Illiad, BlackBoard, Relais and other proprietary and open-source software

• copyright and permission issues concerning interlibrary loan and electronic reserve

• aspects of quality assurance, efficiency studies, best practices, library 2.0, the impact of Open WorldCat and Google Scholar, buy instead of borrow and practical practices addressing special problems of international interlibrary loan, international currency, payment problems, IFLA, and shipping

• interlibrary loan of specialized library materials such as music, media, CDs, DVDs, items from electronic subscriptions and legal materials

• special problems of medical, music, law, government and other unique types of libraries

• new opportunities in interlibrary loan and the enhancement of interlibrary loan as a specialization and career growth position in library organizations

Researchers and practitioners are invited to submit on or before December 30, 2009 for Spring publication or February 8, 2010 for Summer publication. For further details, instructions for authors and submission procedures please visit: http://www.informaworld.com/wild . Please send all submissions and questions to the Editor Rebecca Donlan at rdonlan@fgcu.edu

Editor-In-Chief:

Rebecca Donlan, Assistant Director, Collection Management

Florida Gulf Coast University

rdonlan@fgcu.edu

CFP: Open Repositories 2010 -- "The Grand Integration Challenge"

Repositories have been successfully established -- within and across institutions -- as a major source of digital information in a variety of environments such as research, education and cultural heritage. In a world of increasingly dispersed and modularized digital services and content, it remains a grand challenge for the future to cross the borders between diverse poles:

- the web and the repository,

- knowledge and technology,

- wild and curated content,

- linked and isolated data,

- disciplinary and institutional systems,

- scholars and service providers,

- ad-hoc and long-term access,

- ubiquitous and personalized environments,

- the cloud and the desktop.

The Open Repositories Conference (6 to 9-JUL-2010 in Madrid, Spain) brings together individuals and organizations responsible for the conception, development, implementation, and management of digital repositories, as well as stakeholders who interact with them for achieving the widest possible integration in theoretical, practical, and strategic matters.

The program of papers, panel discussions, poster presentations, user groups, workshops, and tutorials will reflect the whole community of Open Repositories. Dedicated open source software community meetings for the major platforms (EPrints, DSpace and Fedora) will provide opportunities to advance and coordinate the development of repository installations across the world.

Submission Process

* Conference papers *

We welcome two- to four-page proposals for presentations or panels that deal with theoretical, practical, or administrative issues of digital repositories. Abstracts of accepted papers will be made available through the conference's web site; all presentations and related materials used in the program sessions will be deposited in the upcoming virtual conference proceedings of Open Repositories 2010.

* User Group Presentations *

Two- to four-page proposals for presentations or panels that focus on use of one of the major repository platforms (EPrints, DSpace and Fedora) are invited from developers, researchers, repository managers, administrators and practitioners describing novel experiences or developments in the construction and use of repositories.

* Posters *

We invite developers, researchers, repository managers, administrators and practitioners to submit one-page proposals for posters.

* Workshops and Tutorials *

Proposals for workshops require a submission as well and can be accommodated before or after the main conference. For preparatory inquiries about workshop facilities, please contact the local team at alopezm@pas.uned.es.

Please submit your paper through the conference system. The conference system will be linked from the conference web site http://or2010.fecyt.es/ and will be available for submissions as of January 15th, 2010.

The best-rated papers from the conference will be subsequently published in the Journal of Digital Information http://journals.tdl.org/jodi.

Important Dates and Contact Info

- 01-MAR-2010: Submission deadline (papers, user groups, posters, workshops, tutorials)

- 15-APR-2010: Notification of acceptance for conference presentations, workshops/tutorials

- 01-MAY-2010: Notification of acceptance for user group presentations and posters

- 06-JUL-2010: Conference start

--DSpace User Group Meeting Contact: Valorie Hollister

val@dspace.org

--Fedora User Group Meeting Contact: Thorny Staples

tstaples@duraspace.org

--EPrints User Group Meeting Chair: Les Carr

lac@ecs.soton.ac.uk

--Program Committee Chair: Wolfram Horstmann

whorstmann@uni-bielefeld.de

--Host Organizing Committee: Alicia Lopez Medina

alopezm@pas.uned.es

Conference Topics

The committee will consider any submission of sufficient quality and originality that relates to the field of Open Repositories. In the general track, preference will be given to submissions that relate to the theme of "The Grand Integration Challenge" as described in the introduction.

Please regard the following list as a selection of topics that indicates relevant fields.

- Data Curation / Data Archives

- Interoperability with Scholarly and Scientific Applications

- Interactions with Learning Environments

- Generic Workflows and Services

- Integrating Open Repositories with the Grid / Cloud

- Applications in Libraries/Archives

- Disciplinary Requirements

Do you have ideas that relate to different topics? Then please go ahead and address them in your submission!

Enjoy!

The Program Committee of Open Repositories 2010

CFP: special issue of Library Trends on Information Literacy

CALL FOR PAPERS - LIBRARY TRENDS: International Journal of the Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign

Special Issue: Information literacy beyond the academy: towards policy formulation

Edited by Dr. John Crawford, Glasgow Caledonian University

Information literacy has not been chosen as a subject for an issue of Library Trends since 1991. That issue was heavily focused on the higher education sector. Since then, research, development, and practitioner activity have moved on, and work on information literacy now also takes place in career choice and management, employability training, skills development, workplace decision making, adult literacies training, community learning and development, public libraries, school and further education, lifelong learning, and health and media literacies. Information literacy has matured sufficiently to have become a national and international policy issue, as evidenced by President Obama’s proclamation and such international statements as the Prague Declaration of 2003.

Papers are invited from all information sectors and academia.

Proposals of no more than 300 words should be sent by 15 January 2010 to John Crawford at jcr@gcal.ac.uk. In framing proposals, intending authors may wish to view the author guidelines on the journal website at http://www.press.jhu.edu/journals/library_trends/guidelines.html

Call for Proposals for March 5 & 6 2010 Virtual Worlds and Libraries Online Conference

Virtual Worlds and Libraries Online Conference (from Lori Bell)

The American Library Association Virtual Communities and Libraries Member Initiative Group (ALA VCL MIG) invites librarians, library staff, vendors, graduate students, and developers to submit proposals for programs related to the topic of libraries in virtual worlds. The conference will be held online using OPAL (Online Programming for All Libraries) web conferencing software, with demonstrations and tours in virtual worlds such as Second Life. The conference dates are Friday and Saturday, March 5 and 6, 2010. Proposals are due Friday, January 15, 2010. Please send proposals to: lbell61520@gmail.com.

Topics:

The Virtual Worlds and Libraries online conference will feature interactive, live online sessions using OPAL web conferencing, with demonstrations and tours in virtual worlds such as Second Life. We are interested in a broad range of submissions that highlight current, evolving and future issues and opportunities involving virtual worlds and libraries. These include but are not limited to the following themes:

• Starting a Library in Virtual Worlds

• How to Build a Library Presence in Second Life

• Working with a Class in Second Life

• Virtual World platforms for libraries

• The future for virtual environments and libraries

• Information Literacy in Virtual worlds

• Health information service in virtual worlds

• Museum and library collaboration in virtual worlds

• Library collaboration in virtual worlds

• Working with other campus agencies on virtual worlds

• Handheld library applications of virtual worlds

• Reference services in virtual worlds

Proposal Submissions:

This conference accepts proposals for presentations delivered in several online formats:

• A featured 45 minute presentation

• Panel discussion with others (10 minutes of presentation)

• Poster sessions

Submit proposals by completing the form below no later than Friday, January 15, 2010. You will be notified by Monday, February 1 if your proposal has been accepted.

Presenters Are Expected To:

• Conduct an online session using OPAL web conferencing system, or provide a demonstration in a virtual world, such as Second Life

• Provide a photo, bio and program description for the conference website by February 10, 2010

• Respond to questions from attendees

• Attend an online 30-60 minute training on OPAL web conferencing

Please fill out the following information for your proposal.

Title of Session:

Description of Session: (100 words or less):

Speakers: (Please include real name and institution):

Program platform: OPAL or specify the virtual world you will be using:

Program Contact Information: (Name, institution, address, email address, phone number):

Thank you for considering a submission for conference participation. If you have questions, please contact:

• Lori Bell, Alliance Library System, lbell@alliancelibrarysystem.com

• Tom Peters, TAP Information Services, tpeters@tapinformation.com

• Rhonda Trueman, abbeyzen@gmail.com

Sign-up for Lexicon PCM Plug-In Bundle UK shipping notification

After meeting extremely favourable reviews at its AES introduction in October, Lexicon today began international shipping of the new PCM Native Reverb Plug-In Bundle. It will arrive in the UK early in January 2010; customers can sign up for an automatic email notification of UK shipping from UK distributor Sound Technology Ltd.

The newest addition to Lexicon’s legendary processing family provides seven Lexicon reverb algorithms that are designed to deliver the highest level of sonic quality and function while offering all the flexibility of native plug-ins.

As the ultimate studio reverb package for creating professional mixes within popular DAWs like Pro Tools, Logic, and Nuendo, the PCM Native Reverb Plug-In Bundle is one of the most highly anticipated introductions from Lexicon yet. It includes unique plug-ins for each reverb including: Vintage Plate, Plate, Hall, Room, Random Hall, Concert Hall, and Chamber, and comes complete with over 950 of the most versatile and finely crafted studio presets.

The PCM Native Reverb Plug-In Bundle is ideal for recording and post-production environments where unsurpassed quality is required. It is a fully functional cross-platform plug-in that is compatible with Windows XP, Vista, and 7, along with Mac OS X 10.4, 10.5, and 10.6 (PowerPC and Intel based). The Bundle is Native only, and requires iLok authorization.

The PCM Native Reverb Plug-In Bundle will be available from authorized Lexicon dealers in the UK in January 2010 with a suggested retail price of £999 ex VAT.

UK shipping notification:

For full information and to register for an automatic email update of UK shipping please visit http://www.soundtech.co.uk/lexicon/pcm-native-reverb-plug-in-bundle/launch

Tuesday, December 22, 2009

Merry Xmas

A colleague sent me this picture from a Spanish company.

It is an Xmas card from WARP, a Spanish IT company.

http://warp.es/

Thanks Nacho for sending me the xmas card, ;-) ;-)

Note: The formula is the greetings card.

WmiPrvSE memory leak. The computer 'localhost' failed to perform the requested operation because it is out of memory or disk space

The computer 'localhost' failed to perform the requested operation because it is out of memory or disk space.

I got this error.

The disk had 75% free space.

So memory was the problem.

I killed the WmiPrvSE process, which was using 700,000 K.

After I killed it, everything went well, and the process started again with 6,000 K.

The Hyper-V Manager started to work without problems.

You can of course solve the problem by restarting the server, but this server was in a production environment.
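To spot this kind of runaway process before it breaks anything, you could scan the output of the Windows `tasklist /FO CSV /NH` command for processes above a memory limit. What follows is only a minimal Python sketch, not what I ran on the server: the function name `processes_over`, the 500,000 K limit, and the sample rows (including the PIDs) are made up for illustration.

```python
import csv
import io


def processes_over(tasklist_csv, limit_kb):
    """Return (image name, PID, memory in K) for rows above limit_kb."""
    flagged = []
    for row in csv.reader(io.StringIO(tasklist_csv)):
        if len(row) < 5:
            continue  # skip blank or malformed lines
        name, pid, mem = row[0], row[1], row[4]
        # "Mem Usage" looks like "700,000 K"; keep only the digits so
        # both "," and "." thousands separators are handled.
        digits = "".join(ch for ch in mem if ch.isdigit())
        if digits and pid.isdigit() and int(digits) > limit_kb:
            flagged.append((name, int(pid), int(digits)))
    return flagged


# Sample text in the shape of `tasklist /FO CSV /NH` output (values are made up).
sample = '''"WmiPrvSE.exe","2480","Services","0","700,000 K"
"svchost.exe","912","Services","0","6,000 K"
'''

print(processes_over(sample, 500_000))
```

With the sample above, only the WmiPrvSE.exe row is reported. On a real server you would feed the function the actual command output, and a flagged PID could then be ended with `taskkill /PID <pid> /F`, which is a heavy hammer, so check what the process is first.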

sAnTos

Panasonic Pro AG-HMC150

Add to that, amazing low light performance, long record and battery time and professional Audio capabilities at a price that will make it an instant hit with a wide range of AV Shooters, Indie creators and Event video professionals. Panasonic Pro AG-HMC150 represents a major step forward in the introduction of a next generation solid state HD camera that extends the six year successful track record of the popular DVX100 plus a lot more.

Panasonic Pro AG-HMC150’s lightweight (lightest 1/3 inch 3CCD available), well balanced professional design features a high performance wide angle Leica 13X zoom lens, 24 and 30 frame progressive capture, both in 720 and 1080 formats, making it perfect for even high level projects. Designed from a clean sheet of paper with much customer input to Panasonic's product development engineers, Panasonic Pro AG-HMC150 sports a Die Cast Alloy chassis and a Three year warranty (upon customer registration) that further endorses its reliability.

Using the latest in compression technology (AVC High Profile) and widely available SD memory cards as the recording media, Panasonic Pro AG-HMC150 is as easy to use as a digital still camera. The content recorded on the SD card can be directly played on a growing number of affordable consumer players, including select models of Playstation 3, Blu-ray players, plasma screens and PC’s. With most NLE systems now supporting AVCHD, content can be edited and rendered to play in any type of SD or HD playback system.

Dynamic Range Stretch

With DRS ON your customer can capture better Video quality when shooting bright, halftone and dark objects in the same frame. (Bride’s white gown details and the Groom’s shades of tuxedo black.) This clever circuit estimates the gamma curve and knee slope of each pixel’s brightness and applies the estimate on a real time basis. The result is more accurate Video with a visually wider dynamic range.

Features

- Full range of HD formats : 1080/60i, 1080/30p, 1080/24p (Native); 720/60p, 720/30p, 720/24p (Native)

- Higher bit-rate recording than consumer models (21 Mbps PH Mode)

- Three latest design 1/3 inch CCD Progressive Imagers

- 13X Wide angle 28mm lens out of the box (35mm equiv.), MOD .6 M, 72mm Ø (Shoot in confined spaces with no need to buy an accessory lens)

- Time Date Stamp for Legal Depositions or surveillance

- Waveform Monitor, Vectorscope plus two Focus displays for accurate, quick focus

- Professional XLR audio input connections

- Time code (DF, NDF, REC RUN, FREE RUN) and USER BIT

- HDMI out, Component Out (mini D terminal), Composite Video Out and RCA Audio Out jacks

- External Time Code Link (Slave & Master Preset) uses the Composite Video Out terminal

- USB 2.0 for file transfer (no need for a VTR)

- 3.5 inch LCD monitor displays thumbnails for quick non-linear access to clips

- Remote control connection for Zoom, Focus, Iris, and start and stop functions

- 14 bit A to D converter and 19 bit Image processing

- Cinelike Gamma and DRS Dynamic Range Stretch (filmmaker requested features)

- Three Neutral Density Filters 1/4, 1/16, 1/64

- Pre-Record (3 Seconds), Digital Zoom 2X / 5X / 10X (in 1080/60i & 720/60p only)

- Three User Set Buttons with 11 choices for customizing Camera to Shooter

Panasonic Professional AG-HMC40 AVCHD

With a full resolution 3 megapixel, 1/4 inch 3MOS imager, Panasonic Professional AG-HMC40 AVCHD produces stunning 1920 x 1080 video in AVCHD (MPEG-4 AVC/H.264), delivering images far superior to HDV. When used for digital still photography, the camera captures photos with 10.6 megapixel resolution directly onto the SD card as a JPEG image. The camera can also be connected directly to a PictBridge photo printer (no PC required).

And unlike HDV tapes, video and photos can be accessed randomly and immediately from the SD cards and played back on a number of consumer devices.

The camcorder's advanced Leica Dicomar lens system offers 12X optical zoom, wide angle setting (40.8mm) and an optical image stabilizer (O.I.S.) feature for precise shooting. The compact camera is also packed with professional video and audio features (HDMI out, date or time stamp, remote zoom, XLR option, etc.).

Using high capacity SD memory cards, Panasonic Professional AG-HMC40 AVCHD provides hours of beautiful high definition recordings at professional level bit rates. It records in a range of 1080 and 720 formats with all four professional AVCCAM recording modes: the PH mode (average 21 Mbps, max 24 Mbps), the HA mode (approx. 17 Mbps), the HG mode (approx. 13 Mbps), and the HE mode (approx. 6 Mbps). AVCCAM offers the benefit of a fast, file based workflow using widely available and reasonably priced SD memory cards.
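The bit rates above translate directly into recording time per card. As a rough Python sketch only: the 32 GB card size and the helper name `minutes_per_card` are assumptions for illustration, and the estimate ignores audio and file system overhead, so real recording times will be somewhat shorter.

```python
# Approximate AVCCAM video bit rates in Mbps, as listed above.
MODES_MBPS = {"PH": 21, "HA": 17, "HG": 13, "HE": 6}


def minutes_per_card(card_gb, mbps):
    """Rough recording time in minutes, ignoring audio and file system overhead."""
    card_megabits = card_gb * 1000 * 8  # decimal gigabytes to megabits
    return card_megabits / mbps / 60


# Assumed card size for illustration: 32 GB.
for mode, rate in MODES_MBPS.items():
    print(mode, round(minutes_per_card(32, rate)), "min")
```

Under these assumptions a 32 GB card holds a little over three hours in PH mode and close to twelve hours in HE mode, which is where the "hours of recordings" claim comes from.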

Technical Details

- HD formats : 1080/60i, 1080/30p, 1080/24p (Native) 720/60p, 720/30p, 720/24p (Native)

- Three latest design 1/4.1 Progressive 3MOS Imagers for full HD resolution

- Long record time : 3 hours with included battery (7 hours continuous with 5,800 mAh battery)

- Touch Panel 2.7 inch Widescreen LCD displays Thumbnails & Audio Metering

- HDMI out, Component Out (mini D terminal), Composite Video and Stereo Audio Out with included cable

Sony HVR-HD1000U

A built-in down converter creates DV material, perfect for standard DVD productions. Plus, a special still photo mode is ideal for producing DVD cases and making wedding photo albums. Whether you are recording weddings and corporate communications or helping students make a documentary, Sony HVR-HD1000U is the best choice on the market today as an entry level professional camcorder.

The next generation of Sony imaging sensor, the ClearVid CMOS Sensor used in Sony HVR-HD1000U camcorder, is quite unique and different from current CMOS technology. The ClearVid CMOS Sensor uses a unique pixel layout rotated 45 degrees to provide high resolution and high sensitivity. This pixel layout technology is also used in higher end professional camcorders. The ClearVid CMOS Sensor, coupled with an Enhanced Imaging Processor (EIP), generates stunning images. Moreover, thanks to the CMOS technology, bright objects do not cause vertical smear.

Sony HVR-HD1000U offers benefits for SD productions, as well as HD. It is easy to use HDV recordings for your current DV editing work. Sony HVR-HD1000U has a down conversion feature that outputs converted DV signals through the i.LINK connector to your current DV non-linear editing system, while retaining an HD master on the tape for future use. Furthermore, Sony HVR-HD1000U offers a DV recording mode (4:3 or 16:9), which can provide a recording time of approximately 120 minutes in LP mode.

Technical Details

- Enhanced mobility and professional aesthetic with this shoulder mount design and black matte body

- HDV1080i recordings can be captured on DigitalMaster professional tape as well as consumer MiniDV

- Built-in down converter creates DV material, perfect for standard DVD productions

- Easy viewing with this large, freely rotating 2.7 inch LCD screen

- Features a Carl Zeiss Vario Sonnar T lens with 10x optical zoom

Sony HDR-FX7

So no matter what the occasion, Sony HDR-FX7 is the perfect camcorder for the situation. A Sony developed Real Time MPEG Encode/Decode system, with reduced energy consumption and a compact size, fits inside a personal camcorder. This provides efficient MPEG2 compression, and recording and playback of clear HD images at the same bit rate as the DV format, so that High Definition video can be recorded on the same cassettes as are used for MiniDV recording.

From the authority in lens technology, the Carl Zeiss Vario Sonnar T lens provides a high quality 20x optical zoom which maintains image clarity and color while reducing glare and flare. Dual independent zoom and focus rings provide precise and detailed control over the amount of zoom and the overall focus of the image with just a turn of the rings. Fast, intuitive framing when zooming, and finely detailed focusing is easy with the natural "feel" of the rings.

Easily adjust the amount of light entering the lens by adjusting exposure brightness in accordance with the iris and gain. The Iris control allows the volume of light to be adjusted (shutter speed and gain are adjustable). Though not small enough to carry with you to Disney, this camcorder opens up new opportunities for serious amateur and semi professionals to record videos suitable for play on new widescreen HDTV sets.

Technical Details

- Record and play back HDV 1080i video : switchable recording in standard definition

- ClearVid CMOS sensor : 20x optical zoom

- Wide 3.5 inch Hybrid Touch Panel Clear Photo LCD Plus display

- Capture 1.2 megapixel stills to Memory Stick Duo

- Professional 62mm Carl Zeiss Vario Sonnar T lens

Canon EOS 7D

In addition to a body only version, Canon sells Canon EOS 7D in a kit with the 28-135mm f3.5-5.6 IS lens (44.8-216mm equivalent). Canon EOS 7D is one of the heavier single grip dSLRs available. There are no radical design departures, but there are tons of subtle, and a few conspicuous, interface changes that greatly enhance the fluidity of the camera's operation. The new viewfinder is great, comparable with that of the D300s: big and bright, with an optional overlay grid.

It's also slightly more comfortable than the D300s' because of the larger eyecup. Adding to its traditional array of buttons for metering, white balance, autofocus, drive mode, ISO sensitivity, and flash compensation Canon EOS 7D now includes an M-Fn button used to cycle through the AF point options, plus Canon brings the LCD illumination button into action for registering the orientation linked AF points. Unfortunately, the buttons are very difficult to differentiate by feel, and the M-Fn and illumination buttons are even smaller and harder to use than the others.

Following trends in consumer dSLR design, Canon EOS 7D now also has an interactive control panel for changing frequently accessed settings, called up with the Q button. Canon went from very few AF options to a gazillion in one model. Of course, there's the veteran full automatic AF selection. Spot AF is a subarea of the traditional single point AF, and for both of these you can choose from any of the 19 AF points. AF point expansion uses the three or four (depending upon location) points surrounding the chosen one.

Zone AF is similar to AF point expansion in that it allows you to define clumps of points in the center, top, bottom, or sides of the full AF area, but in contrast to expansion, where you still choose the primary focus point and it only uses the other points if the subject moves, the camera automatically chooses points from within the defined zone. The bulk of these are really designed to improve focus tracking during continuous shooting, and, much like Nikon's AF system, you have to think very carefully about matching the AF choice with the shooting situation or you can end up with surprising results.

Ditto for the flexible global and lens specific micro-adjustment tools, which it carries over from the higher end models. Very few users need all of these options, and Canon provides a solid interface for enabling or disabling the choices to minimize on the fly confusion. In Live View mode you have three AF options: Live mode (contrast AF), face detect Live mode AF, or Quick AF (the "traditional" faster Live View AF, which uses the faster phase detection scheme but requires more mirror flipping).

Monday, December 21, 2009

Call for Presenters: ACRL New Members Discussion Group

The ACRL New Members Discussion Group invites the submission of proposals for presentation at its meeting at the 2010 ALA Midwinter Conference in Boston, MA on Saturday, January 16, 2010.

Proposals are due by Monday 12/28/2009.

The ACRL New Members Discussion Group is for new (and aspiring) academic librarians. We meet twice a year, at both ALA conferences, to chat about whatever is on our minds. It’s an opportunity for networking and a friendly place to ask any questions you have about succeeding in ACRL. Presenters at this meeting have the opportunity to contribute to the professional development of other academic librarians, gain conference presentation experience, and build their CV. Students are welcome to submit proposals.

This Midwinter conference we want to hear from you on themes relating to Incorporating Technology Tools in Library Instruction. How do you perceive the role of technology in library instruction and how do you handle teaching about technology? We are interested in presentations that share personal experiences with incorporating technology tools, such as customized browser toolbars, screencasting, citation management software, and podcasting into library instruction. The goal of these presentations is to familiarize new and aspiring academic librarians with effective uses of these tools and effective methods of teaching about technology topics. We seek proposals for presentations that address this topic from a variety of angles, including (but not limited to):

-Examples of effective uses of technology tools in library instruction, either as a means for delivering instruction (for example, creating screencasts about citation management software), or as the topic of instruction (for example, delivering library instruction sessions that teach students how to use tools such as podcasting or citation management software)

-How to use technology tools to meet specific learning outcomes

-Successful strategies for promoting library instruction sessions that focus on technology

The ACRL New Members Discussion Group meeting will take place on Saturday, January 16, 2010, from 10:30 a.m. to 12:00 p.m. at the Westin Copley Place Essex Center. Presenters should plan to speak for 10 minutes and allow 5 minutes for questions/discussion. There will be three presentations. Following the presentations, we will open the floor for discussion on the topic, or we can answer your questions about getting involved in national activities and/or academic librarianship in general.

Proposals are due by Monday 12/28/2009. Notification of acceptance will be made by Tuesday 01/05/2010. Please include the following information in your proposal:

1. A cover sheet with your name, title, institutional affiliation (or LIS program), mailing address, phone number, and email address.

2. A second sheet that contains no identifying information and includes the title and a 200-300 word description of your presentation. The description should clearly identify the topic of your presentation, your personal experience with this topic, and how your presentation will contribute to new and aspiring librarians’ understanding of how to incorporate technology tools in library instruction.

3. Keep in mind that there will be no use of technology for these presentations. If your proposal is accepted, you should plan to provide handouts that contain tips, further reading, etc.

Please submit proposals by email to Allie Flanary (ACRL NMDG convener) at aflanary@gmail.com.

2010 LITA National Forum Call for Proposals

Due Date for proposals: February 19, 2010

The 2010 National Forum Committee seeks proposals for high quality concurrent sessions, preconferences and poster sessions for the 13th annual LITA National Forum to be held in Atlanta GA, September 30 - October 3, 2010.

Theme: The Cloud and the Crowd

The Forum Committee is interested in presentations about projects, plans, or discoveries in areas of library-related technology involving emerging cloud technologies, software-as-service, as well as social technologies of various kinds. We are interested in presentations from all types of libraries: public, government, school, academic, special, and corporate. Proposals on any aspect of library and information technology are welcome. Some possible ideas for proposals might include:

* Using virtualized or cloud resources for storage or computing in libraries

* Library-specific open source software (OSS) and other OSS "in" Libraries, technology on a budget

* Crowdsourcing and user groups for supporting technology projects

* Semantic Web

* Training via the crowd

* Social Computing: social tools, collaborative software, etc.

* Engaging your "crowd"

* User created content: Book reviews, tagging, etc.

* Virtual worlds

* Federated and Meta-Searching: design and management, integrated access to resources, search engines

* Digital Libraries/ Institutional Repositories: developments in resource linking, preservation, maintenance, web services

* Harnessing the crowd data to improve the user experience

* Security in the cloud: control vs flexibility, legal implications

* Authentication and Authorization: Digital Rights Management (DRM), authentication, privacy, services for remote patrons

* Web design: information architecture, activity-centered design, user-centered design, usability testing

* Technology Management: project management, geek management, budgeting, knowledge sharing applications

* Globalization and library services - does it matter where your staff or users are?

Presentations must have a technological focus and pertain to libraries and/or be of interest to librarians. Concurrent sessions are approximately 70 minutes in length, and sessions of all varieties are welcomed, from traditional single- or multi-speaker formats to panel discussions, case studies, and demonstrations of projects. Forum 2010 will also accept a limited number of poster session proposals. For projects that will still be in preliminary development in October 2010, we recommend presentations at a lightning talk or other "un-conference"-like activities for which time will be reserved at Forum. A call for these types of presentations and discussions will be issued after February 2010.

New this year:

1. In response to attendee feedback, this year we will be offering "half-session" slots as well as full sessions. This is designed for speakers who do not wish to use the full 75 minutes, but who do not have a partner in mind for sharing the time. The Committee will pair these half-sessions up so that the timing of the Forum remains organized. Please indicate in your proposal whether you are requesting a full or half session. Half sessions should plan on approximately 30 minutes speaking time to allow both speakers time to set up and for Q&A. If you are requesting a full session, you should be prepared to use most of the allotted time.

2. If you are interested in publishing a paper based on your talk in ITAL, you will have the opportunity to indicate that. These proposals will be shared with the ITAL editor.

Presenters are required to submit draft presentation slides and/or handouts in advance for inclusion on the ALA Connect site, and are required to submit final presentation slides or electronic content (video, audio, etc.) to be made available on the Web site after the event.

Your proposals are welcomed and much appreciated! To submit a proposal, enter the following information online at http://docs.lib.purdue.edu/lita2010/

* Title

* Summary (to be used in community feedback/voting: a one-sentence description of your presentation mentioning neither the name(s) nor institution(s) of the presenter(s), max. 200 characters)

* Abstract and brief outline (max 400 words)

* Level indicator (basic, intermediate, or advanced)

* Brief biographical information. Include experience as a presenter and expertise in the topic

* Full contact information

* Is this proposal for a preconference? Concurrent session? Poster session?

* If this proposal is for a concurrent session, might it be considered for a poster session?

* If this proposal is for a concurrent session, might it be expanded into a half-day or full-day preconference?

* If this proposal is for a concurrent session, are you requesting a full or half session?

* How did you hear about the 2010 Forum call for proposals?

Submit proposals by February 19, 2010 online at: http://docs.lib.purdue.edu/lita2010/

The 2010 Forum Planning Committee will review proposals starting in February 2010. You will be contacted about the status of your proposal by the end of March.

Questions? Contact the LITA Office:

lita@ala.org

Library and Information Technology Association (LITA) members are information technology professionals dedicated to educating, serving, and reaching out to the entire library and information community. LITA is a division of the American Library Association.